Table of contents

- What is WebRTC?

- What kind of security does WebRTC offer?

- What security methods do browsers offer?

- What security measures should the developer think about?

- Where to read more about WebRTC security

- Conclusion

Let's say you run a business and want to develop a video conference or add a video chat to your program. How do you know that what the developer has built is safe? What kind of protection can you promise your users? There are a lot of articles, but they are technical, and it's hard to figure out the specifics of security from them. Let's explain in simple words.

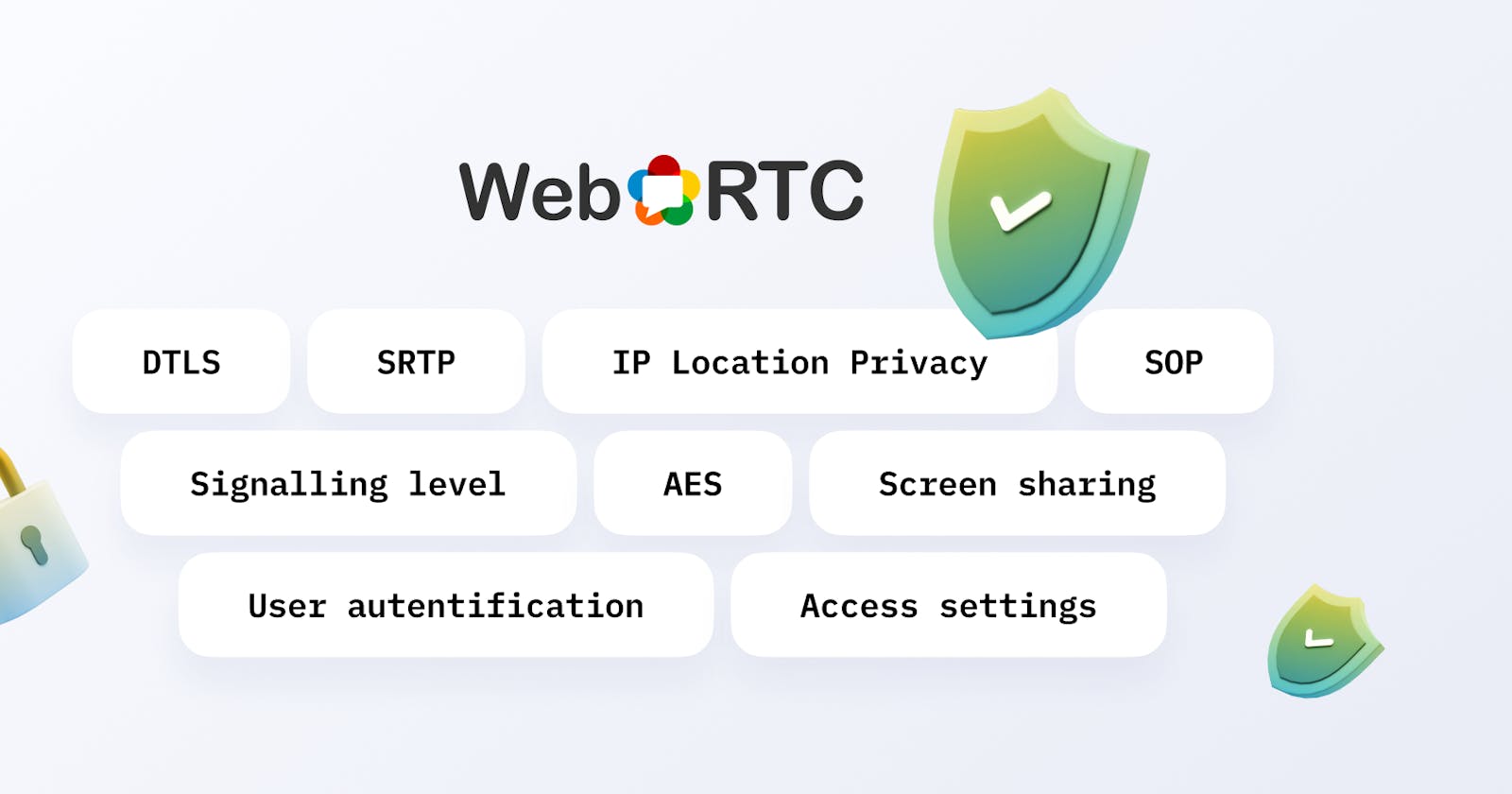

WebRTC security measures consist of 3 parts:

- those offered by WebRTC,

- those provided by the browser,

- and those programmed by the developer.

Let's discuss the measures of each kind, how they are circumvented (the WebRTC security vulnerabilities), and how to protect against them.

What is WebRTC?

WebRTC - Web Real-Time Communications - is an open standard that describes the transmission of streaming audio, video, and content between browsers or other supporting applications in real-time.

WebRTC is an open-source project, so anyone can inspect its code and test it for security issues.

WebRTC works on all Internet-connected devices:

- in all major browsers

- in applications for mobile devices - e.g. iOS, Android

- on desktop applications for computers - e.g., Windows and Mac

- on smartwatches

- on smart TVs

- on virtual reality headsets

To make WebRTC work on these different devices, the WebRTC library was created.

What kind of security does WebRTC offer?

Data encryption other than audio and video: DTLS

The WebRTC library incorporates the DTLS protocol. DTLS stands for Datagram Transport Layer Security. It encrypts data in transit, including keys for transmitting encrypted audio and video. Here you can find the official DTLS documentation from the IETF - Internet Engineering Task Force.

DTLS does not need to be enabled or configured beforehand because it is built in. The video application developer doesn't need to do anything - DTLS in WebRTC works by default.

DTLS is an adaptation of the Transport Layer Security (TLS) protocol for datagram transport such as UDP. Like TLS, it relies on both asymmetric and symmetric encryption. Let's take the example of a paper letter and parcel to understand what symmetric and asymmetric encryption are.

We exchange letters. A postal worker can open a normal letter, or it can be stolen and read. We want nobody to be able to read the letters but us. You come up with a way to encrypt them, like swapping letters in the text. In order for me to decipher your letters, you have to describe how to decipher your cipher and send that description to me. This is symmetric encryption: both you and I encrypt the letters, and we both have the decryption algorithm - the key.

The weakness of symmetric encryption is in the transmission of the key. The letter that carries the key can itself be read by the letter carrier or stolen.

The invention of asymmetric encryption was a major mathematical breakthrough. It uses one key to encrypt and another key to decrypt. It is practically impossible to work out the decryption key from the encryption key. That's why the encryption key is called a public key - you can safely give it to anyone, because it can only encrypt a message. The decryption key is called a private key - and it's not shared with anyone.

Instead of encrypting the letter and sending me the key, you send me an open lock and keep the key. I write you a letter, put it in a box, put my open lock in the same box, and latch your lock on the box. I send it to you, and you open the box with your key, which has not passed to anyone else.

In symmetric encryption, keys are now disposable. For example, we made a call - the keys were created specifically for the call and deleted as soon as we hung up. Therefore, asymmetric and symmetric encryption are equally secure once the connection is established and keys are exchanged. The weakness of symmetric encryption is only that the decryption key has to be transferred.

But asymmetric encryption is much slower than symmetric encryption. The mathematical algorithms are more complicated, requiring more steps. That's why asymmetric encryption is used in DTLS only to securely exchange symmetric keys. The data itself is encrypted with symmetric encryption.
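The division of labor described above, slow asymmetric encryption for the key and fast symmetric encryption for the data, can be sketched in a few lines. This is only an illustration of the general idea using the built-in `crypto` module of Node.js. It is not the actual DTLS handshake, and the choice of RSA and AES-256-GCM as well as the names `sealMessage` and `openMessage` are assumptions made for the example.

```typescript
import {
  createCipheriv,
  createDecipheriv,
  generateKeyPairSync,
  privateDecrypt,
  publicEncrypt,
  randomBytes,
} from "node:crypto";

// The receiver creates a key pair. The public key is the "open lock";
// the private key never leaves the receiver.
const receiver = generateKeyPairSync("rsa", { modulusLength: 2048 });

// Sender side: create a one-time symmetric key, encrypt the message with it,
// then wrap that symmetric key with the receiver's public key.
function sealMessage(message: string) {
  const sessionKey = randomBytes(32); // disposable AES-256 key
  const iv = randomBytes(12);
  const cipher = createCipheriv("aes-256-gcm", sessionKey, iv);
  const ciphertext = Buffer.concat([cipher.update(message, "utf8"), cipher.final()]);
  return {
    wrappedKey: publicEncrypt(receiver.publicKey, sessionKey), // slow, but tiny input
    iv,
    ciphertext, // fast symmetric encryption of the actual data
    authTag: cipher.getAuthTag(),
  };
}

// Receiver side: unwrap the symmetric key with the private key,
// then decrypt the data with the symmetric key.
function openMessage(sealed: ReturnType<typeof sealMessage>): string {
  const sessionKey = privateDecrypt(receiver.privateKey, sealed.wrappedKey);
  const decipher = createDecipheriv("aes-256-gcm", sessionKey, sealed.iv);
  decipher.setAuthTag(sealed.authTag);
  return Buffer.concat([decipher.update(sealed.ciphertext), decipher.final()]).toString("utf8");
}
```

Only the 32-byte session key goes through the expensive asymmetric step. The message itself, however large, is handled by the symmetric cipher.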

How to bypass DTLS?

Cracking the DTLS cipher is a complex mathematical problem. It is not considered feasible in a reasonable time, even with a supercomputer. It's more profitable for hackers to look for other WebRTC security vulnerabilities.

The only practical way to bypass DTLS is to steal the private key: steal your laptop or guess the password to the server.

In the case of video calls through a media server, the server is a separate computer that stores its private key. If you access it, you can eavesdrop and spy on the call.

It is also possible to access your computer. For example, you have gone out to lunch and left your computer on in your office. An intruder enters your office and downloads a file on your computer that will give him your private key.

But first of all, it's like stealing gas: to steal gas, you have to be sitting at the gas line. The intruder has to have access to the wires that carry your traffic - or be on the same Wi-Fi network: sitting in the same office, for instance. But why go through all that trouble? The intruder can simply put a file on your computer that records the screen and sound and sends them to him. You may even download such a malicious file from the Internet yourself by accident if you download unverified programs from unverified sites.

Second, this is not hacking DTLS encryption, but hacking your computer.

How to protect yourself from a DTLS vulnerability?

- Don’t leave your computer turned on and unlocked: protect it with a password.

- Keep your computer's password safe. If you are the owner of a video program, keep the password to the server where it is installed safe. Change your password on a regular basis. Don't reuse a password that you use elsewhere.

- Don’t download untested programs.

- Don’t download anything from unverified sites.

Audio and video encryption: SRTP

DTLS encrypts everything but the video and audio. DTLS is secure, but because of this it’s slow. Video and audio are "heavy" types of data. Therefore, DTLS is not used for real-time video and audio encryption - it would be laggy. They are encrypted by SRTP - Secure Real-time Transport Protocol, which is faster but therefore less secure. The official SRTP documentation is published by the IETF - Internet Engineering Task Force.

How to bypass SRTP?

2 SRTP security vulnerabilities:

- Packet headers are not encrypted

SRTP encrypts the contents of RTP packets, but not the header. Anyone who sees SRTP packets will be able to tell if the user is currently speaking. The speech itself is not revealed, but it can still be used against the speaker. For example, law enforcement officials would be able to figure out if the user was communicating with a criminal.

- Cipher keys can be intercepted

Suppose users A and B are exchanging video and audio. They want to make sure that no one is eavesdropping. To do this, the video and audio must be encrypted. Then, if they are intercepted, the intruder will not understand anything. User A encrypts his video and audio. Now no one can understand them, not even B. A needs to give B the key so that B can decrypt the video and audio on his side. But the key can also be intercepted - that’s the vulnerability of SRTP.
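To make the first point concrete, here is a minimal sketch of what an observer can read from a captured SRTP packet without any key. It parses only the fixed 12-byte RTP header layout; the function name `readRtpHeader` and the sample bytes in the usage note are made up for illustration.

```typescript
interface RtpHeader {
  version: number;
  hasExtension: boolean;
  marker: boolean;
  payloadType: number;
  sequenceNumber: number;
  timestamp: number;
  ssrc: number;
}

// Reads the fixed part of an RTP header. SRTP leaves these bytes in the clear,
// so no decryption key is needed to run this on captured traffic.
function readRtpHeader(packet: Uint8Array): RtpHeader {
  if (packet.length < 12) {
    throw new Error("an RTP header needs at least 12 bytes");
  }
  const view = new DataView(packet.buffer, packet.byteOffset, packet.byteLength);
  const first = view.getUint8(0);
  const second = view.getUint8(1);
  return {
    version: first >> 6,                 // top 2 bits
    hasExtension: (first & 0x10) !== 0,  // X bit
    marker: (second & 0x80) !== 0,       // M bit
    payloadType: second & 0x7f,          // low 7 bits
    sequenceNumber: view.getUint16(2),   // big-endian
    timestamp: view.getUint32(4),
    ssrc: view.getUint32(8),             // identifies the stream
  };
}
```

For example, the bytes `80 6f 00 01 00 00 03 c0 12 34 56 78` followed by an encrypted payload parse to version 2, payload type 111, sequence number 1, and timestamp 960. The payload stays unreadable, but the stream identifier, packet timing, and packet sizes are visible to anyone on the path.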

How to defend against SRTP attacks?

- Packet headers are not encrypted

There is a proposed standard on how to encrypt packet headers in SRTP. As of October 2021, this solution is not yet included in SRTP; its status is that of a proposed standard. When it’s included in SRTP, its status will change to “approved standard”. You can check the status here, under the Status heading.

- Cipher keys can be intercepted

There are 2 methods of key exchange: 1) via SDES – Session Description Protocol Security Descriptions 2) via DTLS encryption

1) SDES doesn’t support end-to-end encryption. That is, if there is an intermediary between A and B, such as a proxy, you have to give the key to the proxy. The proxy will receive the video and audio, decrypt them, encrypt them back - and pass them to B. Transmission through SDES is not secure: it is possible to intercept decrypted video and audio from the intermediary at the moment when they are decrypted, but not yet encrypted back.

2) Unlike video or audio, the key is not "heavy" data. It can be encrypted with reliable DTLS, which handles key encryption quickly, with no lags. This method is called DTLS-SRTP hybrid. Use this method instead of SDES to protect yourself.
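One rough way to see which of the two methods a session description uses is to look at its attributes: SDES carries keys in `a=crypto:` lines, while DTLS-SRTP announces a certificate fingerprint in an `a=fingerprint:` line. The sketch below checks for those two attributes only. The function names are hypothetical, and a real application would rely on its WebRTC stack rather than on string matching.

```typescript
interface KeyExchangeReport {
  usesDtlsSrtp: boolean;    // an a=fingerprint: line is present
  exposesSdesKeys: boolean; // an a=crypto: line is present (key travels in the SDP)
}

function inspectKeyExchange(sdp: string): KeyExchangeReport {
  const lines = sdp.split(/\r?\n/).map((line) => line.trim());
  return {
    usesDtlsSrtp: lines.some((line) => line.startsWith("a=fingerprint:")),
    exposesSdesKeys: lines.some((line) => line.startsWith("a=crypto:")),
  };
}

// Accept only descriptions that use DTLS-SRTP and carry no SDES key material.
function isSafeKeyExchange(sdp: string): boolean {
  const report = inspectKeyExchange(sdp);
  return report.usesDtlsSrtp && !report.exposesSdesKeys;
}
```

A signaling server or a test suite could use a check like this to reject or flag descriptions that still carry SDES keys.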

IP Address Protection - IP Location Privacy

The IP address is the address of a computer on the Internet.

What is the danger if an intruder finds out your IP address?

Think of IP as your home address. The thief can steal your passport, find out where you live, and come to break into your front door.

Once they know your IP, a hacker can start looking for vulnerabilities in your computer. For example, run a port check and find out what programs you have installed.

For example, one of them is a messenger. And there's information online that this messenger has a vulnerability that can be used to get into your computer. A hacker can use it as in the case above, where you downloaded an unverified program and it started recording your screen and sound and sending them to the hacker. Only in this case you didn't install anything yourself; you were careful. The hacker got this program onto your computer through the messenger vulnerability. The messenger is just an example. Any program with a vulnerability on your computer can be used.

The other danger is that a hacker can use your IP address to determine where you are physically. This is why, in movies, negotiators stall a terrorist on the phone: to get a fix on his location.

How do I protect my IP address from intruders?

It’s impossible to be completely protected from this. But there are two ways to reduce the risks:

- Postpone the IP address exchange until the user picks up the phone. So, if you do not take the call, the other party will not know your address. But if you do pick up, they will. This is done by having the JavaScript code hold off ICE negotiation until the user picks up the phone.

ICE - Interactive Connectivity Establishment: it describes the protocols and routes needed for WebRTC to communicate with the remote device. Read more about ICE in our article WebRTC in plain language.

The downside: Remember, social networks and Skype show you who's online and who's not? You can't do that.

- Don’t use p2p communication, but use an intermediary server. In this case, the interlocutor will only know the IP address of the intermediary, not yours.

The disadvantage: All traffic will go through the intermediary. This creates other security problems like the one above about SDES.

If the intermediary is a media server and it’s installed on your server, it’s as secure as your server because it’s under your control. For measures to protect your server, see the SOP section below.
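Both approaches above can be sketched in code. In browsers, a peer connection can be told to use only relayed routes by setting `iceTransportPolicy` to `"relay"` in its configuration. The sketch below builds such a configuration as a plain object and classifies ICE candidate lines by their type. The interface `RelayOnlyConfig`, the function names, and the TURN address in the usage note are assumptions for illustration.

```typescript
// Local stand-in for the fields of the browser's RTCConfiguration used here.
interface RelayOnlyConfig {
  iceServers: { urls: string; username: string; credential: string }[];
  iceTransportPolicy: "relay";
}

// Builds a configuration that allows only routes through the TURN relay,
// so the other party sees the relay's address instead of the user's.
function relayOnlyConfig(turnUrl: string, username: string, credential: string): RelayOnlyConfig {
  return {
    iceServers: [{ urls: turnUrl, username, credential }],
    iceTransportPolicy: "relay",
  };
}

// ICE candidate lines contain "typ host", "typ srflx", "typ prflx" or "typ relay".
function candidateType(candidateLine: string): string | null {
  const match = /\styp\s(\w+)/.exec(candidateLine);
  return match?.[1] ?? null;
}

// Any candidate that is not a relay candidate carries one of the user's own addresses.
function revealsOwnAddress(candidateLine: string): boolean {
  return candidateType(candidateLine) !== "relay";
}
```

In a browser, the object returned by `relayOnlyConfig("turn:turn.example.com:3478", "user", "secret")` would be passed to `new RTCPeerConnection(...)`. To postpone the address exchange, the application would create the peer connection, or at least forward candidates to the other side, only after the user has accepted the call.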

What security methods do browsers offer?

These methods are only for web applications running in a browser. For example, this doesn’t apply to mobile applications on WebRTC.

SOP - Same Origin Policy

When you open a website, the scripts needed to run that site are downloaded to your computer. A script is a program that runs inside the browser. Each script is downloaded from somewhere - the server where it is physically stored. This is its origin. One site may have scripts from different origins. SOP means that scripts downloaded from different origins do not have access to each other.

For example, you have a video chat site. It has your scripts - they are stored on your server. And there are third-party scripts - for example, a script to check if the contact form is filled out correctly. Your developer used it so he didn't have to write it from scratch himself. You have no control over the third-party script. Someone could hack it: gain access to the server where it is stored and make that script, for example, request access to the camera and microphone of users on all sites where it is used. Third-party scripting attacks are called XSS - cross-site scripting.

If there were no SOP, the third-party script would simply gain access to your users' cameras and microphones. Their conversations could be viewed and listened to or recorded by an intruder.

But the SOP is there. The third-party script isn't on your server - it's at another origin. Therefore, it doesn't have access to the data on your server. It can't access your user's camera and microphone.

But it can show the user a request to give it access to the camera and the microphone. The user will see the "Grant access to camera and microphone?" prompt again, even though he has already granted access. This will look strange, but the user may grant it, thinking that he’s giving access to your site. Then the attacker would still be able to watch and listen to his conversations. The protection SOP gives here is that, without it, access would not even be requested again.

Access to the camera and microphone is just the most obvious example. The same goes for screen sharing, for example.

It's even worse with text chat. If there were no SOP, it would be possible to send this malicious script to the chat room. Scripts aren’t displayed in chat: the user would see a blank message. But the script would be executed - and the attacker could watch and listen to his conversations and record them. With SOP the script will not run - because it is not on your server, but in another origin.

How to bypass SOP and how to protect yourself

- Errors in CORS - Cross-Origin Resource Sharing

Complex web applications cannot work comfortably in an SOP environment. Even components of the same website can be stored on different servers - in different origins. Asking the user for permission every time would be annoying.

This is why developers are given the ability to add exceptions to the SOP - Cross-Origin Resource Sharing (CORS). The developer must list the allowed origins (the exceptions) separated by commas, or put "*" to allow all. During the development process, there are often several versions of the site: production - available to real users; pre-production - available to the site owner for final testing before release to production; test - for testing by testers; and the developer's own version. The URLs of all versions are different. The programmer has to change the URLs in the list of SOP exceptions each time he moves the code from one version to another. There is a temptation to put "*" to speed things up. He can forget to replace the "*" in the list of exceptions in the production version, and then the SOP for your site will not work. It will become vulnerable to any third-party scripts.

How to protect against errors in CORS

For the developer - check for XSS vulnerabilities and list specific SOP exceptions instead of "disabling" SOP by typing "*".

For the user - revoke camera and microphone permissions that are no longer needed. The browser stores a list of permissions: to revoke one, uncheck the box.
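On the server side, the advice to list specific exceptions instead of "*" often comes down to an allowlist check like the one sketched below. The origins and the function name `corsHeadersFor` are placeholders. The idea is that the server answers with the caller's origin only when that origin is on the list, and sends no CORS header otherwise.

```typescript
// Hypothetical list of origins allowed to call this server.
// In practice it would come from per-environment configuration.
const ALLOWED_ORIGINS: ReadonlySet<string> = new Set([
  "https://app.example.com",
  "https://staging.example.com",
]);

// Returns the CORS response headers for a request with the given Origin header.
// Unknown origins get no Access-Control-Allow-Origin header at all,
// so the browser keeps applying the same-origin policy to them.
function corsHeadersFor(requestOrigin: string | undefined): Record<string, string> {
  if (requestOrigin !== undefined && ALLOWED_ORIGINS.has(requestOrigin)) {
    return {
      "Access-Control-Allow-Origin": requestOrigin,
      // Tells caches that the response differs per requesting origin.
      Vary: "Origin",
    };
  }
  return {};
}
```

Keeping the list in configuration for each environment removes the temptation to ship "*" to production: moving between test and production changes the configuration, not the code.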

- Replacing the server your site connects to via WebSocket

What is WebSocket?

Remember the CORS, the SOP exception that you have to set manually? There is another exception that is always in effect by default. This is WebSocket.

Why such an insecure technology, you ask? For real-time communication. The request-response technology that SOP covers doesn't allow for real-time communication, because it's one-way: only the client can start an exchange.

Imagine you're driving in a car with a child in the back seat. You are the server side, the child is the client side. The child asks you periodically: are we there? You answer "no." With request-response technology, when you finally arrive, you cannot say "we have arrived" to the child yourself. You have to wait for the child to ask. WebSocket allows you to say "arrived" yourself without having to wait for the question.

Examples from the field of programming: video and text chats. If WebSocket didn't exist, the client side would have to periodically ask, "do I have incoming calls?", "do I have messages?" Even if you ask once every 5 seconds, it's already a delay. You can ask more often - once a second, for example. But then the load on the server increases, the server must be significantly more powerful, that is, more expensive. This is inefficient and this is why WebSocket was invented.

What is the vulnerability of WebSocket

WebSocket is a direct connection to the server. But which one? Well, normally yours. But what if the intruder replaces your server address with his own? Yes, his server address would not be at your origin. But the connection is through WebSocket, so the SOP won't check it and won't protect it.

What can happen because of this substitution? On the client-side, your text or video chat will receive a new message or an incoming call. It will appear to be one person writing or calling, but in fact, it will be an intruder. You may receive a message from your boss, such as "urgently send... my Gmail account password, the monthly earnings report" - whatever. You might get a call from an intruder pretending to be your boss, asking you to do something. If the voices are similar, you won't even think that it might not be him - because the call is displayed as if it was from him.

How this can be done is a creative question. You have to look for vulnerabilities in the site. An example is XSS. You have a site with a video chat and a contact form, the messages from which are displayed in the admin panel of the site. A hacker submits a "replace the server address with this one" script through the contact form. The script appears in the admin panel along with all the messages from the contact form. Now it's "inside" your site - it has the same origin. SOP will not stop it. The script is executed, and the server address is changed to the attacker's.

How to protect against spoofing the server that your site connects to via WebSocket

- Filter all user-supplied data for scripts

If the developer has programmed the site not to accept scripts from users, the message from the contact form in the example above would not be accepted, and an intruder would not be able to swap your server for his own on a WebSocket connection this way. Always filter user messages for scripts: this protects against server spoofing in WebSocket as well as many other problems.

- Program a check that the connection through WebSocket is made to the correct origin

For example, generate a unique codeword for each WebSocket connection. This codeword is not sent over the WebSocket, which means the SOP works. If a request for a codeword is sent to a third-party source, SOP will not allow it to be sent - because the third-party server is of a different origin.

- Code obfuscation

To obfuscate code is to make it incomprehensible while keeping it working. Programmers write code clearly - at least they should :) That way, if another developer takes over the code, he can make out which part does what and work with it. For example, programmers give variables clear names. The server address to connect to via WebSocket is also a variable and will be named clearly, e.g. "server address for WebSocket connection". After running the code through obfuscation, this variable will be called, for example, "C". An outside attacker will not understand which variable is responsible for what.

The mechanism of codeword generation is stored in the code. Cracking it is an extra effort, but it is possible. If you make the code unreadable, the intruder will have a much harder time finding this mechanism in the code.
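As a complement to the measures above, the client can also refuse to open a WebSocket to any address that is not on a fixed allowlist. This is a simpler check than the codeword scheme described above, not an implementation of it. The host name and the function name `isTrustedSocketUrl` are placeholders.

```typescript
// Hypothetical list of hosts the application is allowed to open WebSockets to.
const TRUSTED_SOCKET_HOSTS: ReadonlySet<string> = new Set(["signal.example.com"]);

// Returns true only for encrypted WebSocket URLs (wss:) whose host is on the allowlist.
function isTrustedSocketUrl(candidate: string): boolean {
  let url: URL;
  try {
    url = new URL(candidate);
  } catch {
    return false; // not a valid absolute URL
  }
  return url.protocol === "wss:" && TRUSTED_SOCKET_HOSTS.has(url.hostname);
}
```

The application would call `isTrustedSocketUrl(address)` right before `new WebSocket(address)` and abort if it returns false. Comparing the parsed host name, rather than searching the string for the expected name, is what rejects look-alike addresses such as `wss://signal.example.com.evil.test/`. A script that is already running inside the page could bypass a client-side check like this, so it adds to filtering user input and does not replace it.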

- Server hacking

If your server gets hacked, a malicious third-party script can be "put" on your server. The SOP will not help: your server is now the origin of this script. This script will be able to take advantage of the camera and microphone access that the user has already given to your site. The script still won't be able to send the recording to a third-party server, but it doesn't need to. The attacker has access to your server: he can simply take the recording from there.

How a server can be hacked is not among WebRTC security issues, so it’s beyond the scope of this article. For example, an attacker could simply steal your server username and password.

How to protect yourself from the server hack

The most obvious thing is to protect the username-password.

If your server is hacked, you can’t protect yourself from the consequences. But there are ways to make life difficult for the attacker.

Store all user content in encrypted form on the server. For example, records of video conferences. The server itself should be able to decrypt them. So, the server stores the decryption method. If the server is hacked, the attacker can find it. But that's going to take time. He won't be able to just swing by the server, copy the conversations and leave. The time he will have to spend on the compromised server will increase. This gives the server owner time to take some measures, such as finding the active session of the connected intruder and disabling him as an administrator and changing the server password.

Ideally, do not store user content on the server. For example, allow recording conferences, but don't save them on the server, let the user download the file. Once the file is downloaded - only the user has it, it's not on the server.

Give the user more options to protect himself - develop notifications in the interface of your program. We don't recommend this method for everyone, because it's inconvenient for the user. But if you are developing video calls for a bank or a medical institution, security is more important than convenience:

- Ask for access to the camera and microphone before each call.

If your site gets hacked and they want to call someone on behalf of the user without their permission, the user will get a notification: "Do you want the camera and microphone access for the call?" He didn't initiate that call, so it's likely to keep the user safe: he'll click "no." It's safe, but it's inconvenient. What percentage of users will go to a competitor instead of clicking "allow" before every call?

- Ask for access to the camera and microphone to call specific users.

Calling a user for the first time? See a notification saying "Allow camera and microphone access for calls to ...Chris Baker (for example)?". It's less inconvenient for the user if they call the same people often. But it still loses in convenience to programs that ask for access only once.

Use a known browser from a trusted source

What is it?

The program you use to visit websites. Video conferencing works in the browser. When you use it, you assume the browser is secure.

How do attackers use the browser?

By injecting malicious code that does what the hacker wants.

How to protect yourself?

- Don't download browsers from untrusted sources. Here's a list of official sites for the most popular browsers:

Firefox, Opera, Google Chrome, Safari, Microsoft Edge

- Don't use unknown browsers

Just like with the links. If a browser looks suspicious, don’t download it. You can give a list of safe browsers to the users of your web application. Although, if they are on your site, it means that they already use some browser... 😊

What security measures should the developer think about?

WebRTC was built with security in mind. But not everything depends on WebRTC, because it's only the part of your program that is responsible for the calls. If someone steals the user's password, WebRTC won't protect the user, no matter how secure the technology is. Let's break down how to make your application more secure.

Signaling Layer

The Signaling Layer is responsible for exchanging the data needed to establish a connection. How connection establishment works is written by the developer - it happens before WebRTC and all its encryption come into play. Simply put: you're on a video call site and a pop-up appears, "Call for you, accept/reject?" Everything before you hit "accept" is the signaling layer, establishing a connection.

How can attackers use the signaling layer and how can you protect yourself?

There are many possibilities to do this. Let's look at the 3 primary ones: Man-in-the-Middle attack, Replay attack, Session hijacking.

- MitM (Man-in-the-Middle) attack

In the context of WebRTC, this is the interception of traffic before the connection is established - before the DTLS and SRTP encryption described above comes into effect. An attacker sits between the callers. He can eavesdrop and spy on conversations or, for example, send a pornographic picture to your conference - this is called zoombombing.

This can be any intruder connected to the same Wi-fi or wired network as you - he can watch and listen to all the traffic going on your Wi-fi network or on your wire.

How to protect yourself?

Use HTTPS instead of HTTP. HTTPS supports SSL/TLS encryption throughout the session. Man-in-the-middle will still be able to intercept your traffic. But the traffic will be encrypted and he won't understand it. He can save it and try to decrypt it, but he won't understand it right away.

SSL - Secure Sockets Layer - is the predecessor to TLS. It turns HTTP into HTTPS, securing the site. Users used to go to HTTP and HTTPS sites without seeing the difference. Now HTTPS is effectively mandatory: developers have to protect their sites with SSL certificates. Otherwise, browsers won't simply let the user go to the site: they'll show that dreaded "your connection is not secure" message, and only by clicking "more" can the user choose to go to the site anyway. Not all users will do that, which is why all developers now add SSL certificates to their sites.

- Replay attack

You have protected yourself from Man-in-the-middle with HTTPS. Now the attacker hears your messages but does not understand them. But he hears them! And therefore, he can repeat them - replay. For example, you gave the command "transfer 100 dollars". And the attacker, though he does not understand it, repeats "transfer 100 dollars" - and without additional protection, the command will be executed. You will be charged 100 dollars twice, and the second 100 dollars will be sent to the same place as the first.

How to protect yourself?

Set a random session key. This key will be active during one session and cannot be used twice. "Transfer $100. ABC". If an intruder repeats "transfer $100. ABC" - it will become clear that the message is repeated and it should not be executed. This is exactly what we did in the NextHuddle project - a video conferencing service for educational events. NextHuddle is designed for an audience of 5000 users and 25 streamers.

- Session hijacking

Session hijacking is when a hacker takes over your Internet session. For example, you call the bank. You say who you are, your date of birth, or a secret word. "Okay, we recognize you. What do you want?" - and then the intruder takes the phone receiver from you and tells them what he wants.

How do you protect yourself?

Use HTTPS. You have to be man-in-the-middle to hijack the session. So what protects against man-in-the-middle also protects against session hijacking.
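The replay protection described above, where a command is accepted only once together with its random key, can be sketched as follows. This is a simplified illustration, not the NextHuddle implementation. The names `ReplayGuard`, `SignalingMessage`, and `handleCommand` are hypothetical.

```typescript
interface SignalingMessage {
  command: string;
  nonce: string; // random one-time value attached by the sender, like "ABC" above
}

class ReplayGuard {
  private readonly seen = new Set<string>();

  // Returns true the first time a nonce is presented and false on any repeat.
  accept(nonce: string): boolean {
    if (this.seen.has(nonce)) {
      return false;
    }
    this.seen.add(nonce);
    return true;
  }
}

// Executes a command only if its nonce has not been used before.
function handleCommand(guard: ReplayGuard, message: SignalingMessage): "executed" | "rejected" {
  return guard.accept(message.nonce) ? "executed" : "rejected";
}
```

One guard would be kept per session and discarded when the session ends, so the set of seen values does not grow forever. The nonce itself should come from a cryptographically secure random source so that an attacker cannot guess a fresh one.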

Selecting the DTLS encryption key length

DTLS is an encryption protocol. The protocol uses encryption algorithms such as AES. AES comes in different key lengths: 128 bits, or the more complex and better protected 256 bits. In WebRTC they are chosen by the developer. Make sure that the key length selected for AES is the one that gives the highest security, 256.

You can read how to do this in the Mozilla documentation, for example. A certificate is generated, and when you create a peer connection you pass in this certificate.

Authentication and member tracking

The task of the developer is to make sure that everyone who enters the video conference room is authorized to do so.

Example 1 - private rooms: for example, a paid video lesson with a teacher. The developer should program a check: has the user paid for the lesson? If he has paid, let him in, and if he hasn't, don't let him in.

This seems obvious, but we have encountered many cases where you can copy the URL of such a paid conference and send it to anyone and he goes and visits the conference even though he did not pay for the lesson.

Example 2 - open rooms: for example, business video conferences of the "join without registration" type. This is done for convenience: when you don't want to make a business partner waste time and register. You just send him a link, he follows it and gets in the conference.

If there are not many participants, the owner himself will see if someone extra has joined. But if there are a lot, the owner won’t notice. One way out is for the developer to program manual approval of new participants by the owner of the conference.
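The two examples above amount to a check that runs on the server every time someone tries to enter a room, regardless of how they obtained the link. A minimal sketch of that check, with hypothetical types and names:

```typescript
interface Room {
  kind: "private" | "open";
  paidUserIds: Set<string>;        // private rooms: users who have paid for the lesson
  approvedVisitorIds: Set<string>; // open rooms: visitors the owner has approved
}

type JoinDecision = "allow" | "deny" | "ask-owner";

// Decides what to do with a join attempt. Knowing the room URL is never enough.
function decideJoin(room: Room, visitorId: string): JoinDecision {
  if (room.kind === "private") {
    // Example 1: only users who have paid may enter.
    return room.paidUserIds.has(visitorId) ? "allow" : "deny";
  }
  // Example 2: anyone with the link may knock, but the owner approves manually.
  return room.approvedVisitorIds.has(visitorId) ? "allow" : "ask-owner";
}
```

The important design choice is that the decision is made on the server from stored data such as payments and approvals, not from anything contained in the link itself.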

Example 3 - helping the user to protect his login and password. If an intruder gets hold of a user's login and password, he will be able to log in with it.

Program the login through third-party services. For example, social networks, Google login, or Apple login on mobile devices. You can also do without a password and send a login code to the user's email or phone. This will reduce the number of passwords a user has to keep. A thief would then have to steal not a password from your program but a password from a third-party service such as a social network, mobile account, or email.

You can use two methods at once - for example, the username and password from your program plus a confirmation code sent to the phone. Then, in order to hack the account, an attacker will need to steal two secrets instead of one.

Not all users will want to log in that hard and long to call. A choice can be given: one login method or two. Those who care about security will choose two, and be grateful.

Access Settings

Let's be honest - we don't always read the access settings dialogs. If the user is used to clicking OK, the application may get permissions he didn't want to give.

At the other extreme, the user may delete the app if they don't immediately understand why they're being asked for access.

The solution is simple - show care. Write clearly what permissions the user gives and why.

For example, in mobile applications: before showing a standard pop-up requesting access to geolocation, show an explanation like "People in our chat room call people nearby. Allow geolocation access, so we can show you the people nearby."

Screen sharing

Any app with a screen sharing feature should warn the user about exactly what they are showing.

For example, before a screencasting session, when the user selects the area of the screen to be shown. Make a reminder notification so that the user doesn't accidentally show a piece of the screen with data they don't want to show. "What do you want to share?" - and options: " - entire screen, - only one application - select which one, such as just the browser."

If you gave the site permission to share your screen, and the site gets hacked, the hacker can send you a script that opens some web page in your browser while you're sharing the screen. For example, he knows how links to social networking posts are formed. He builds a link to your correspondence with a particular person that he wants to see. He's not logged in to your social network, so when he follows that link himself, he won't see your correspondence. But if he has hacked a site that you've allowed to show your screen, the next time you share the screen there he'll run a script that opens that page with the correspondence in your browser. You will rush to close it, but too late: the screen share has already passed it to the intruder. The protection against this is the same as against hacking the server - keep your passwords safe. Hacking a site is difficult, though. It is easier for an attacker to send you a fake link that requests screen sharing.

Where to read more about WebRTC security

There are many articles on the internet about security in WebRTC. There are 2 problems with them:

- They merely express someone's subjective opinion. Our article is no exception. The opinion may be wrong.

- Most articles are technical: it might be difficult for somebody who’s not a programmer to understand.

How to solve these problems?

Use the scientific method of research: read primary sources, the publications confirmed by someone's authority. In scientific work, these are publications in Higher Attestation Commission (HAC) journals - before publication in them, the work must be approved by another scientist from the HAC. In IT these are the W3C - World Wide Web Consortium and the IETF - Internet Engineering Task Force. The work is approved by technical experts from Google, Mozilla, and similar corporations before it is published.

- WebRTC security considerations from the W3C specification - in brief

- WebRTC security considerations from the IETF - details on threats, a bit about protecting against them

- IETF's WebRTC security architecture - more on WebRTC threat protection

The documentation above is correct but written in such technical language that a non-technical person can't figure it out. Most of the articles on the internet are the same way. That's why we wrote this one. After reading it:

- The basics will become clear to you (hopefully). Maybe this will be enough to make a decision.

- If not, the primary sources will be easier for you to understand. Cooperate with your programmer - or reach out to us for advice.

Conclusion

WebRTC itself is secure. But if the developer of a WebRTC-based application doesn’t take care of security, his users will not be safe.

For example, in WebRTC all data except video and audio is encrypted by DTLS, and audio and video are encrypted by SRTP. But many WebRTC security settings are chosen by the developer of the video application: for example, how to transfer keys to SRTP - via secure DTLS or not.

Furthermore, WebRTC is only a way to transmit data when the connection is already established. What happens to users before the connection is established is entirely up to the developer: as he programs it, so it will be. What SOP exceptions to set, how to let users in a conference, whether to use HTTPS - all this is up to the developer.

Write to us, we'll check your video application for security.
