Have you ever thought about how videos are processed? What about applying effects? In this AVFoundation tutorial, I’ll try to explain video processing on iOS in simple terms. This topic is quite complicated yet interesting. You can find a short guide on how to apply the effects down below.

Core Image

Core Image is Apple’s framework for high-performance image processing and analysis. Its main components are the CIImage, CIFilter, and CIContext classes.

With Core Image, you can chain different filters (CIFilter) together to create custom effects. You can also create effects that run on the GPU (graphics processor), which moves some of the load off the CPU (central processor) and speeds up the app.
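As a rough illustration of filter chaining, the sketch below feeds the output of one built-in filter into another. The helper name `ApplySepiaThenBlur` and the parameter values are assumptions made for this example; the filters themselves (CISepiaTone and CIGaussianBlur) are standard Core Image filters.

```objc
#import <CoreImage/CoreImage.h>

// Hypothetical helper: applies a sepia tone, then a Gaussian blur.
static CIImage *ApplySepiaThenBlur(CIImage *input) {
    CIFilter *sepia = [CIFilter filterWithName:@"CISepiaTone"];
    [sepia setValue:input forKey:kCIInputImageKey];
    [sepia setValue:@0.8 forKey:kCIInputIntensityKey];

    CIFilter *blur = [CIFilter filterWithName:@"CIGaussianBlur"];
    // The output of the first filter becomes the input of the second one.
    [blur setValue:sepia.outputImage forKey:kCIInputImageKey];
    [blur setValue:@4.0 forKey:kCIInputRadiusKey];

    // A Gaussian blur enlarges the image extent, so crop back to the original bounds.
    return [blur.outputImage imageByCroppingToRect:input.extent];
}
```

A CIImage is only a recipe for producing pixels. No processing happens until a CIContext renders the final image, so chaining filters like this adds little cost on its own.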

AVFoundation

AVFoundation is a framework for working with media files on iOS, macOS, watchOS, and tvOS. With AVFoundation, you can create, edit, and play QuickTime movies and MPEG-4 (MP4) files. You can also play HLS streams and build custom features for working with video and audio files, such as players and editors.

Adding an effect

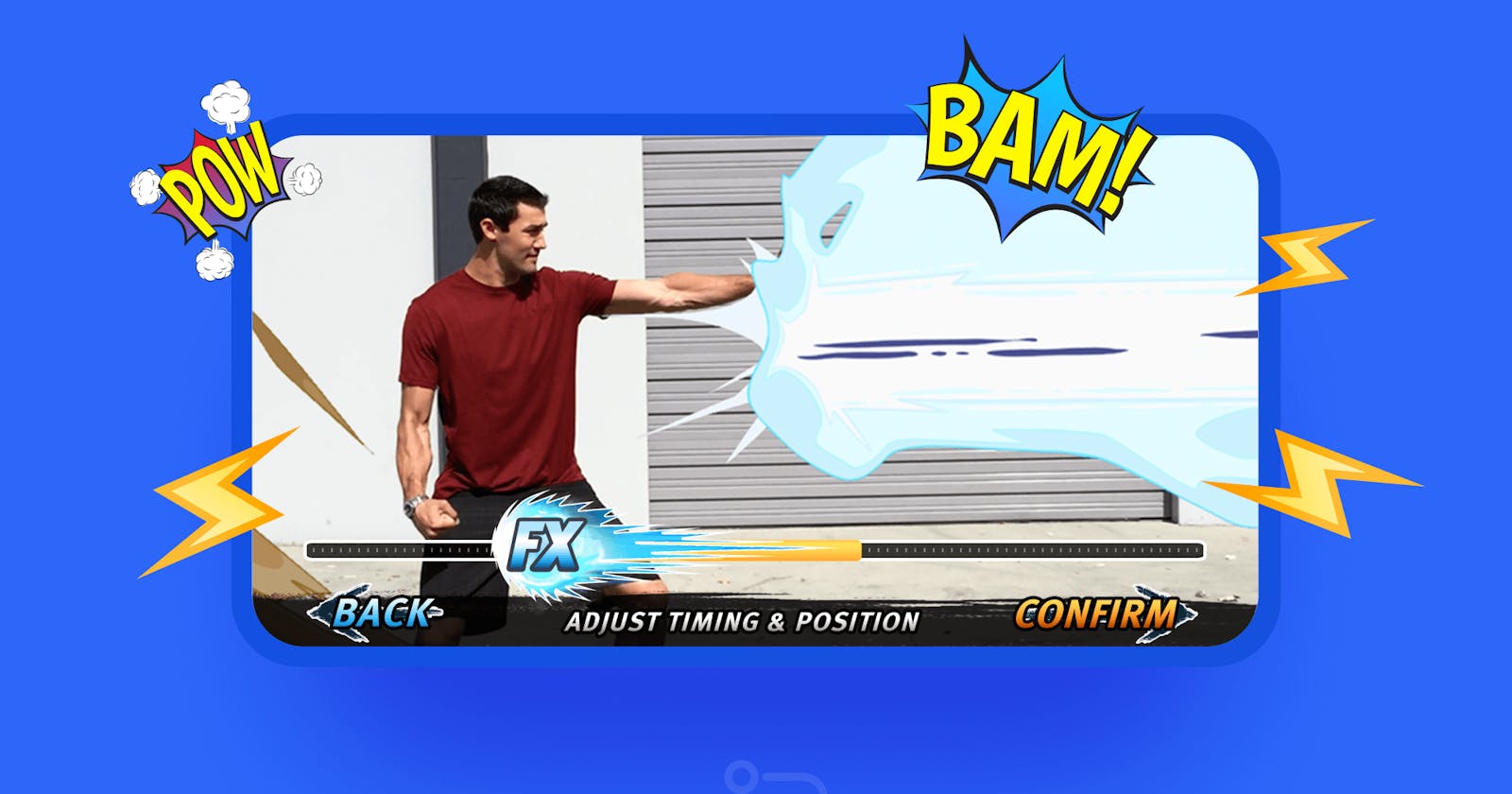

Let’s say you need to add an explosion effect to your video. What do you do?

First, you’ll need to prepare three videos: the main one where you’ll apply the effect, the effect video with an alpha channel, and the effect video without an alpha channel.

An alpha channel is an additional channel that can be integrated into an image. It contains information about the image’s transparency and can provide different transparency levels, depending on the alpha type.

We need the alpha channel so that the effect video does not completely cover the main one. Here is an example of a picture with and without an alpha channel:

Transparency goes down as the color gets whiter. Therefore, black is fully transparent whereas white is not transparent at all.

After applying a video effect, we’ll only see the explosion itself (the white part of an image on the right), and the rest will be transparent. It will allow us to see the main video where we apply the effect.
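The masking described above can be written as a per-pixel interpolation. This is a sketch of the idea, assuming the mask value m is normalized to the range from 0 (black) to 1 (white) and applied to each color channel separately; the symbols are introduced here for illustration only.

```latex
\text{out} = m \cdot \text{top} + (1 - m) \cdot \text{background}, \qquad 0 \le m \le 1

% Worked example for one channel: m = 0.25, top = 0.8, background = 0.4
\text{out} = 0.25 \cdot 0.8 + 0.75 \cdot 0.4 = 0.2 + 0.3 = 0.5
```

With m = 0 the pixel comes entirely from the main video, and with m = 1 it comes entirely from the effect video.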

Then, we need to read the three videos at the same time and combine the images, using CIFilter.
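One way to read frames from each video is AVAssetReader. The sketch below is an assumption about how the sample buffers could be obtained, not necessarily how the original project does it; the helper name `MakeVideoOutput` is hypothetical, and the same helper would be called once per video.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: creates a reader output that vends decoded BGRA frames
// from the first video track of the file at `url`.
static AVAssetReaderTrackOutput *MakeVideoOutput(NSURL *url, AVAssetReader **outReader) {
    AVAsset *asset = [AVAsset assetWithURL:url];
    // Synchronous track lookup; deprecated on recent SDKs but still available.
    AVAssetTrack *track = [asset tracksWithMediaType:AVMediaTypeVideo].firstObject;
    if (track == nil) { return nil; }

    NSError *error = nil;
    AVAssetReader *reader = [AVAssetReader assetReaderWithAsset:asset error:&error];
    if (reader == nil) { return nil; }

    // Ask for decoded frames in a pixel format that Core Image can consume.
    NSDictionary *settings = @{
        (id)kCVPixelBufferPixelFormatTypeKey : @(kCVPixelFormatType_32BGRA)
    };
    AVAssetReaderTrackOutput *output =
        [AVAssetReaderTrackOutput assetReaderTrackOutputWithTrack:track
                                                   outputSettings:settings];
    if (![reader canAddOutput:output]) { return nil; }
    [reader addOutput:output];
    if (![reader startReading]) { return nil; }

    // The caller must keep the reader alive for as long as it reads frames.
    if (outReader != NULL) { *outReader = reader; }
    return output;
}
```

Each call to `-copyNextSampleBuffer` on the returned output yields the next frame as a CMSampleBufferRef, or NULL at the end of the track. The caller owns that buffer and releases it with `CFRelease` once the frame has been processed.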

First, we get a reference to a CVImageBuffer from each CMSampleBuffer. CVImageBuffer is the base type for different kinds of image data, and CVPixelBuffer, which we’ll need later, is derived from it. We then create a CIImage from the CVImageBuffer. It looks something like this in the code:

CVImageBufferRef imageRecordBuffer = CMSampleBufferGetImageBuffer(recordBuffer);

CIImage *ciBackground = [CIImage imageWithCVPixelBuffer:imageRecordBuffer];

CVImageBufferRef imageBuffer = CMSampleBufferGetImageBuffer(buffer);

CIImage *ciTop = [CIImage imageWithCVPixelBuffer:imageBuffer];

CVImageBufferRef imageAlphaBuffer = CMSampleBufferGetImageBuffer(alphaBuffer);

CIImage *ciMask = [CIImage imageWithCVPixelBuffer:imageAlphaBuffer];

After creating a CIImage for each of the three videos, we need to combine them using CIFilter. The code will look roughly like this:

CIFilter *filterMask = [CIFilter filterWithName:@"CIBlendWithMask" keysAndValues:@"inputBackgroundImage", ciBackground, @"inputImage", ciTop, @"inputMaskImage", ciMask, nil];

CIImage *outputImage = [filterMask outputImage];

Once again we get a CIImage, but this time it is composed of the three CIImages that we got before. Now we render the new CIImage into a CVPixelBufferRef using CIContext. The code will look roughly like this:

CVPixelBufferRef pixelBuffer = [self.contextEffect renderToPixelBufferNew:outputImage];
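Note that `renderToPixelBufferNew:` is not a method of CIContext, so it is presumably a custom helper in the project. The function below is a guess at what such a helper might do, using the standard `-[CIContext render:toCVPixelBuffer:]` call. The name `CreatePixelBufferFromImage` and the BGRA pixel format are assumptions, and the sketch expects an image with a finite extent.

```objc
#import <CoreImage/CoreImage.h>
#import <CoreVideo/CoreVideo.h>

// Hypothetical stand-in for the project's -renderToPixelBufferNew: helper.
// The caller owns the returned buffer and must call CVPixelBufferRelease on it.
static CVPixelBufferRef CreatePixelBufferFromImage(CIContext *context, CIImage *image) {
    size_t width = (size_t)CGRectGetWidth(image.extent);
    size_t height = (size_t)CGRectGetHeight(image.extent);

    // An empty IOSurface dictionary requests an IOSurface-backed buffer.
    NSDictionary *attributes = @{
        (id)kCVPixelBufferIOSurfacePropertiesKey : @{}
    };

    CVPixelBufferRef pixelBuffer = NULL;
    CVReturn status = CVPixelBufferCreate(kCFAllocatorDefault, width, height,
                                          kCVPixelFormatType_32BGRA,
                                          (__bridge CFDictionaryRef)attributes,
                                          &pixelBuffer);
    if (status != kCVReturnSuccess) { return NULL; }

    // Render the composited image into the newly created buffer.
    [context render:image toCVPixelBuffer:pixelBuffer];
    return pixelBuffer;
}
```

Allocating a new buffer for every frame is simple but not the cheapest option. When writing with AVAssetWriterInputPixelBufferAdaptor, its `pixelBufferPool` property can supply reusable buffers instead.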

Now, we have a finalized pixel buffer. We need to append it to the output video through the writer’s pixel buffer adaptor, and the result is a video with the effect applied.

[self.writerAdaptor appendPixelBuffer:pixelBuffer withPresentationTime:CMTimeMake(self.frameUse, 30)];
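The article does not show how `writerAdaptor` is created. The sketch below is one possible setup, assuming H.264 output in an MP4 container; the helper name `MakeAdaptor` and the chosen settings are assumptions for illustration.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: builds a writer and a pixel buffer adaptor for H.264 output.
static AVAssetWriterInputPixelBufferAdaptor *MakeAdaptor(NSURL *outputURL, CGSize size,
                                                         AVAssetWriter **outWriter) {
    NSError *error = nil;
    AVAssetWriter *writer = [AVAssetWriter assetWriterWithURL:outputURL
                                                     fileType:AVFileTypeMPEG4
                                                        error:&error];
    if (writer == nil) { return nil; }

    NSDictionary *videoSettings = @{
        AVVideoCodecKey  : AVVideoCodecTypeH264,
        AVVideoWidthKey  : @(size.width),
        AVVideoHeightKey : @(size.height)
    };
    AVAssetWriterInput *input =
        [AVAssetWriterInput assetWriterInputWithMediaType:AVMediaTypeVideo
                                           outputSettings:videoSettings];

    // Describe the buffers that will be appended (BGRA is an assumption here).
    NSDictionary *bufferAttributes = @{
        (id)kCVPixelBufferPixelFormatTypeKey : @(kCVPixelFormatType_32BGRA)
    };
    AVAssetWriterInputPixelBufferAdaptor *adaptor =
        [AVAssetWriterInputPixelBufferAdaptor
            assetWriterInputPixelBufferAdaptorWithAssetWriterInput:input
                                       sourcePixelBufferAttributes:bufferAttributes];

    if (![writer canAddInput:input]) { return nil; }
    [writer addInput:input];
    if (![writer startWriting]) { return nil; }
    [writer startSessionAtSourceTime:kCMTimeZero];

    // The caller must keep the writer alive until writing has finished.
    if (outWriter != NULL) { *outWriter = writer; }
    return adaptor;
}
```

`CMTimeMake(self.frameUse, 30)` expresses the frame index over a timescale of 30, so frame n is presented at n/30 seconds, which corresponds to 30 frames per second. Before each append it is worth checking that the input’s `readyForMoreMediaData` is YES, and after the last frame the input should be marked as finished and the writer closed with `finishWritingWithCompletionHandler:`.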

The effect has now been added to the video. The work was done on the GPU, which took load off the CPU and therefore increased the app’s speed.

Conclusion

Adding effects to videos on iOS is quite a complicated task, but it can be done if you know how to use the basic frameworks for working with media on iOS. If you want to learn more about it, feel free to get in touch with us via the Contact us form!